The rise of Large Language Models (LLMs) has revolutionized how we interact with computers, process information, and build applications. But harnessing this power effectively requires specialized tools and frameworks. This article explores three leading frameworks that streamline LLM application development (PromptFlow, LangChain, and Semantic Kernel), comparing their features, capabilities, and ecosystems to help developers choose the best tool for their needs.

Understanding the Basics

- PromptFlow: A toolkit focused on the end-to-end LLM app lifecycle. It simplifies prompt engineering, testing, evaluation, and deployment, making it ideal for building high-quality LLM applications. Think of it as a visual canvas for your LLM workflow.

- LangChain: A flexible, modular framework that excels at integrating LLMs with various tools and data sources. Its strength lies in its ability to chain together different components, enabling complex LLM interactions.

- Semantic Kernel: Microsoft’s SDK designed to bridge the gap between LLMs and traditional programming logic. It focuses on orchestrating workflows that involve both AI and business logic.

PromptFlow

PromptFlow, developed by Microsoft, streamlines the end-to-end development lifecycle of LLM-based AI applications. It simplifies prompt engineering, enabling the creation of production-quality LLM apps [1]. A “flow” in PromptFlow is defined as an executable instruction set that can implement AI logic [2]. PromptFlow offers a visual interface for designing, testing, and evaluating prompts in real time [3]. This is particularly useful for applications like customer service automation, where LLMs interpret customer queries and either provide solutions or route them to the right team [3].

Key Features of PromptFlow

- Visual Flow Creation: Create executable flows that link LLMs, prompts, Python code, and other tools in a visual workflow [1].

- Debugging and Iteration: Easily debug and iterate flows, tracing interactions with LLMs [1].

- Evaluation and Metrics: Evaluate flows and calculate quality and performance metrics with large datasets [1].

- CI/CD Integration: Integrate testing and evaluation into your CI/CD system to ensure flow quality [1].

- Deployment Flexibility: Deploy flows to various serving platforms or integrate them into your application’s codebase [1].

- Collaboration: Supports team collaboration, enabling multiple users to work together on prompt engineering projects [4].

- Mass Testing and Evaluation: Allows mass testing across multiple inputs and uses various metrics like classification accuracy, relevance, and groundedness to assess prompt effectiveness [3].
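To make the idea of a flow more concrete, here is a plain-Python sketch of what a flow does conceptually: nodes (a prompt template, a model call, a Python post-processing step) wired into a directed acyclic graph and executed in dependency order, using the customer-service routing scenario described above. This is not PromptFlow's API (real DAG flows are typically defined in a `flow.dag.yaml` file); `FlowNode`, `run_flow`, `ROUTES`, and the keyword-matching `fake_llm` stub are all hypothetical stand-ins.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, List


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call: keyword-based intent detection."""
    text = prompt.lower()
    if "refund" in text or "charged" in text:
        return "billing"
    if "password" in text or "login" in text:
        return "account"
    return "general"


@dataclass
class FlowNode:
    func: Callable[..., Any]                          # work performed by this node
    inputs: List[str] = field(default_factory=list)   # upstream nodes or flow inputs


def run_flow(nodes: Dict[str, FlowNode], flow_inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Execute nodes in dependency order; each node receives its upstream outputs."""
    # Dependency graph over nodes only; flow inputs are available from the start.
    graph = {name: [i for i in node.inputs if i in nodes] for name, node in nodes.items()}
    results: Dict[str, Any] = dict(flow_inputs)
    for name in TopologicalSorter(graph).static_order():
        node = nodes[name]
        results[name] = node.func(*(results[i] for i in node.inputs))
    return results


ROUTES = {"billing": "Billing team", "account": "Account support", "general": "General help desk"}

customer_service_flow = {
    # Prompt node: fill a template with the customer's question.
    "build_prompt": FlowNode(lambda q: f"Classify the intent of this query: {q}", ["question"]),
    # LLM node: classify the intent (stubbed above).
    "classify": FlowNode(fake_llm, ["build_prompt"]),
    # Python node: map the intent to a support queue.
    "route": FlowNode(lambda intent: ROUTES[intent], ["classify"]),
}

result = run_flow(customer_service_flow, {"question": "I was charged twice for my order"})
print(result["route"])  # Billing team
```

Keeping every intermediate result in one dictionary mirrors how a flow lets you inspect each node's output when debugging.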

While PromptFlow excels in its prompt engineering capabilities and provides a robust environment for building LLM-powered applications, it’s important to note that its flows are largely static: the sequence of steps is fixed when the flow is designed. For scenarios that need more dynamic adaptation, developers often combine PromptFlow with tools like LangChain or Semantic Kernel to achieve more complex orchestration and flexibility [5].

Programming Languages and Frameworks

PromptFlow primarily supports Python [6]. However, there is ongoing work to expand support to C# and potentially other languages in the future [7]. It integrates seamlessly with Azure Machine Learning and Azure AI Studio [5].

Community and Ecosystem

PromptFlow has a growing community with resources like the PromptFlow Resource Hub, which provides a gallery of use cases and tutorials [9]. It also offers a VS Code extension for an interactive flow development experience [10].

Pricing and Licensing

PromptFlow is an open-source project with an MIT license, allowing for free use and modification [10]. However, when used within the Azure AI platform, costs may be incurred based on resource consumption, such as flow execution time and compute resources [12].

LangChain

LangChain is a framework for developing applications powered by large language models. It simplifies the LLM application lifecycle, including development, productionization using LangSmith, and deployment using LangGraph Platform [14]. LangChain excels at handling memory, allowing AI to remember previous conversations, which is crucial for maintaining context and ensuring relevant responses [16].

Key Features of LangChain

- Modular and Extensible: Provides a modular and composable framework for building applications by combining different components, such as language models, data sources, and processing steps [17].

- Memory Management: Allows AI to remember previous conversations, maintaining context and ensuring coherent responses [16].

- Integration with Various Models: Integrates with a wide range of language models, including those from OpenAI, Cohere, Hugging Face, and Anthropic [18].

- Data Augmentation: Enables language models to access new datasets without retraining [19].

- Chains: Chains are the core building block of LangChain, linking components such as prompts, models, and data sources so that together they produce context-aware responses [19].
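Memory and chains work together: before each model call, the conversation so far is inserted into the prompt so the model can resolve references to earlier messages. The plain-Python sketch below shows the idea behind a simple buffer-style memory. It is not LangChain's API; `ConversationBuffer`, `chat`, and the `echo_model` stub are hypothetical, and the stub just reports how many earlier user turns it was shown so the effect is visible without a real LLM.

```python
from typing import Callable, List, Tuple


class ConversationBuffer:
    """Hypothetical buffer memory: keeps the last `max_turns` (user, ai) exchanges."""

    def __init__(self, max_turns: int = 5) -> None:
        self.max_turns = max_turns
        self.turns: List[Tuple[str, str]] = []

    def as_prompt_prefix(self) -> str:
        # Render stored turns as plain text to prepend to the next prompt.
        return "".join(f"User: {u}\nAI: {a}\n" for u, a in self.turns)

    def save(self, user: str, ai: str) -> None:
        self.turns.append((user, ai))
        # Drop the oldest turns so the prompt stays within the context window.
        self.turns = self.turns[-self.max_turns:]


def chat(model: Callable[[str], str], memory: ConversationBuffer, user_input: str) -> str:
    """One conversational step: build prompt with history, call model, store the turn."""
    prompt = memory.as_prompt_prefix() + f"User: {user_input}\nAI:"
    reply = model(prompt)
    memory.save(user_input, reply)
    return reply


def echo_model(prompt: str) -> str:
    """Stub model: reports how many earlier user turns appear in its prompt."""
    earlier = prompt.count("User:") - 1
    return f"I can see {earlier} earlier message(s)."


memory = ConversationBuffer(max_turns=2)
chat(echo_model, memory, "Hi")                # "I can see 0 earlier message(s)."
chat(echo_model, memory, "My order is late")  # "I can see 1 earlier message(s)."
chat(echo_model, memory, "Any update?")       # "I can see 2 earlier message(s)."
chat(echo_model, memory, "Thanks")            # still 2: the oldest turn was dropped
```

Capping the buffer is the simplest way to bound prompt size; summarizing older turns is a common alternative.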

By integrating multiple models, LangChain lets developers draw on the strengths of each one, which can improve how their applications understand and generate natural language [20].

Programming Languages and Frameworks

LangChain primarily supports Python and JavaScript [21]. It offers integrations with various frameworks and libraries, such as Flask and TensorFlow [23].

Community and Ecosystem

LangChain boasts a large and active community with extensive documentation, tutorials, and resources [24]. It also has a dedicated Slack channel and community forum for support and knowledge sharing [24].

Pricing and Licensing

LangChain is open-source and free to use under the MIT License [21]. However, associated services like LangSmith for debugging, testing, and monitoring have usage-based pricing [25].

Semantic Kernel

Semantic Kernel (SK) is an SDK from Microsoft that integrates LLMs with conventional programming languages like C#, Python, and Java [26]. It is enterprise-ready and is being used by Microsoft and other Fortune 500 companies [27]. SK allows developers to define plugins that can be chained together and orchestrated with AI [28]. SK excels in its ability to combine natural language with traditional code, making it well-suited for enterprise-grade solutions [26].

Key Features of Semantic Kernel

- Plugin Architecture: Allows developers to define plugins that extend what an LLM can do, either by granting it new capabilities or by focusing an LLM invocation on a specific topic [29].

- AI Orchestration: Enables automatic orchestration of plugins using AI planners, allowing LLMs to generate a plan to achieve a user’s goal [28].

- Memory Management: Includes special plugins to remember and store data, maintaining context during tasks [30].

- Integration with AI Providers: Integrates with AI models from different providers, like OpenAI and Hugging Face [30].

- Native Functions: Provides a framework for creating and cataloging custom code, called native functions, which can be used alongside semantic functions [31].
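The split between semantic functions (prompt templates sent to an LLM) and native functions (ordinary code) is easiest to see in a small model of a kernel. The sketch below is a hypothetical, plain-Python `MiniKernel` that registers both kinds of function under a `Plugin.function` name and invokes them the same way. It is not Semantic Kernel's API: the real SDK registers plugins on a `Kernel` object and sends semantic functions to a configured AI service. Here the `{{$input}}` placeholder only mimics SK's template syntax, and `fake_completion` is a stub standing in for the AI service.

```python
from typing import Callable, Dict


def fake_completion(prompt: str) -> str:
    """Hypothetical stand-in for an LLM service: returns the first sentence of the text."""
    text = prompt.split("Text:", 1)[1].strip()
    return text.split(".")[0].strip() + "."


class MiniKernel:
    """Hypothetical kernel: a registry of callable functions grouped by plugin."""

    def __init__(self, completion: Callable[[str], str]) -> None:
        self.completion = completion
        self.functions: Dict[str, Callable[[str], str]] = {}

    def add_native_function(self, plugin: str, name: str, func: Callable[[str], str]) -> None:
        # Native function: plain code, executed directly.
        self.functions[f"{plugin}.{name}"] = func

    def add_semantic_function(self, plugin: str, name: str, template: str) -> None:
        # Semantic function: render the prompt template, then send it to the LLM.
        def run(value: str) -> str:
            return self.completion(template.replace("{{$input}}", value))
        self.functions[f"{plugin}.{name}"] = run

    def invoke(self, qualified_name: str, value: str) -> str:
        # Both kinds of function are called the same way.
        return self.functions[qualified_name](value)


kernel = MiniKernel(fake_completion)
kernel.add_semantic_function("Writer", "Summarize", "Summarize in one sentence. Text: {{$input}}")
kernel.add_native_function("Text", "Upper", str.upper)

summary = kernel.invoke("Writer.Summarize", "Semantic Kernel mixes prompts and code. It is open source.")
shout = kernel.invoke("Text.Upper", summary)  # "SEMANTIC KERNEL MIXES PROMPTS AND CODE."
```

Giving both kinds of function one calling convention is what lets an orchestrator chain them without caring which ones involve an LLM.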

Semantic Kernel includes built-in planners such as the SequentialPlanner, providing developers with tools to manage and execute complex tasks efficiently [32].
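A sequential plan is an ordered list of function calls in which each step's output becomes the next step's input. The hypothetical sketch below executes such a plan in plain Python. The function names and the hand-written plan are assumptions made for illustration. With a planner, the plan would be generated by an LLM from the user's goal rather than written by hand, and this is not Semantic Kernel's planner code.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical plugin functions available to the plan (native code stand-ins).
FUNCTIONS: Dict[str, Callable[[str], str]] = {
    "Text.Trim": str.strip,
    "Text.Lower": str.lower,
    "Text.WordCount": lambda s: str(len(s.split())),
}


def execute_plan(plan: List[str], goal_input: str) -> Tuple[str, List[str]]:
    """Run each step in order, piping the previous output into the next step."""
    value = goal_input
    trace: List[str] = []
    for step in plan:
        value = FUNCTIONS[step](value)
        trace.append(f"{step} -> {value!r}")  # record each step for debugging
    return value, trace


# A plan an LLM might emit for the goal "normalize this text and count its words".
plan = ["Text.Trim", "Text.Lower", "Text.WordCount"]
result, trace = execute_plan(plan, "  Semantic Kernel Plans  ")
# result == "3"; trace shows the intermediate value after each step
```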

Programming Languages and Frameworks

Semantic Kernel supports C#, Python, and Java [33]. It integrates seamlessly with other Microsoft technologies and services, such as Azure and Visual Studio [32].

Community and Ecosystem

Semantic Kernel has a growing community with resources like the Awesome Semantic Kernel repository, which provides a curated list of articles, tutorials, and tools [34]. It also has a dedicated blog and community forum for discussions and support [35].

Pricing and Licensing

Semantic Kernel is open-source and free to use under the MIT License [36].

Comparison of LLM Development Tools

The following table provides a detailed comparison of PromptFlow, LangChain, and Semantic Kernel across various key features:

| Feature | PromptFlow | LangChain | Semantic Kernel |
|---|---|---|---|
| Primary Focus | Prompt engineering and flow orchestration | Building LLM-powered applications | Integrating LLMs with conventional programming languages |
| Key Strengths | Visual flow creation, debugging, and evaluation | Modularity, memory management, and integration with various models | Plugin architecture, AI orchestration, and memory management |
| Programming Languages | Primarily Python, with ongoing work to support C# | Python and JavaScript | C#, Python, and Java |
| Supported Frameworks | Azure Machine Learning, Azure AI Studio | Flask, TensorFlow | Azure, Visual Studio |
| Community | Growing community with resources and a VS Code extension | Large and active community with extensive documentation and support | Growing community with curated resources and a dedicated blog |
| Licensing | Open-source (MIT License) | Open-source (MIT License) | Open-source (MIT License) |
| Ease of Use | Can have a steeper learning curve, especially for complex flows | Relatively easy to learn, especially for Python developers due to extensive documentation and community support | Can have a learning curve associated with understanding and implementing plugins and planners |
| Specific Use Cases | Well-suited for tasks involving prompt variations, evaluation, and optimization, such as customer service automation and question-answering systems | Ideal for building a wide range of LLM applications, including chatbots, question-answering systems, and text summarization tools | Suitable for building enterprise-grade AI solutions that require integration with existing codebases and complex orchestration of LLM capabilities |

All three tools are open-source projects, offering flexibility and cost-effectiveness for developers.

Choosing the Right Tool

The best choice depends on your specific needs and priorities:

- PromptFlow: Ideal for beginners and those who prefer a visual approach. Its streamlined workflow makes it easy to get started and iterate quickly.

- LangChain: Best for developers comfortable with code and who need flexibility to integrate with various tools and data sources.

- Semantic Kernel: Suited for complex projects that require integrating LLMs with existing code and business logic.

Learning Resources

- PromptFlow: Prompt flow documentation (https://microsoft.github.io/promptflow/)

- Semantic Kernel: Create AI agents with Semantic Kernel

PromptFlow is a powerful toolkit developed by Microsoft that streamlines the entire process of building LLM-powered applications. It offers a comprehensive suite of features that simplify development, testing, and deployment, making it an ideal choice for creating high-quality LLM apps.

Key Features and Benefits:

- Visual Workflow Design: PromptFlow provides a user-friendly interface for designing LLM workflows. You can visually connect different components, such as prompts, LLMs, and Python code, to create complex interactions. This visual approach makes it easier to understand and manage the flow of your application.

- Simplified Prompt Engineering: Prompt engineering is crucial for effective LLM interaction. PromptFlow simplifies this process by providing tools for creating, testing, and refining prompts. You can experiment with different prompt variations and see their impact on the LLM’s output.

- Streamlined Testing and Evaluation: Evaluating the performance of your LLM app is essential. PromptFlow offers built-in tools for testing and evaluating your workflows. You can run your flows with different inputs, analyze the outputs, and measure key metrics to ensure your application meets your requirements.

- Easy Deployment: Once your LLM app is ready, PromptFlow simplifies deployment. You can deploy your flows to various platforms, including Azure AI Studio, making it easy to integrate your application into your existing infrastructure.

- Enhanced Collaboration: PromptFlow facilitates collaboration among developers. You can share your workflows with others, allowing for feedback and collaborative development. This can help improve the quality and efficiency of your LLM app development process.
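Batch evaluation comes down to running the same flow over a labeled dataset and aggregating a metric. The sketch below computes classification accuracy in plain Python. `classify_ticket` is a hypothetical stand-in for the flow under test, and the four-row dataset is made up for illustration. In PromptFlow itself the equivalent would be a batch run followed by an evaluation flow, which this sketch does not reproduce.

```python
from typing import Callable, Dict, List


def classify_ticket(question: str) -> str:
    """Hypothetical flow under test: keyword-based intent classifier."""
    q = question.lower()
    if "refund" in q or "charged" in q:
        return "billing"
    if "password" in q:
        return "account"
    return "general"


def evaluate(flow: Callable[[str], str], dataset: List[Dict[str, str]]) -> Dict[str, object]:
    """Run the flow on every row and report accuracy plus the failing rows."""
    failures = []
    for row in dataset:
        prediction = flow(row["question"])
        if prediction != row["label"]:
            failures.append({**row, "prediction": prediction})
    accuracy = 1 - len(failures) / len(dataset)
    return {"accuracy": accuracy, "failures": failures}


# Illustrative labeled rows (made up for this sketch).
dataset = [
    {"question": "I was charged twice", "label": "billing"},
    {"question": "Reset my password", "label": "account"},
    {"question": "Where is my parcel?", "label": "general"},
    {"question": "Can I get my money back?", "label": "billing"},
]
report = evaluate(classify_ticket, dataset)
# report["accuracy"] == 0.75; the last row is misclassified as "general"
```

Looking at the failing rows, not just the headline number, is what points you to the prompt variations that need work.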

Use Cases:

PromptFlow is well-suited for a wide range of LLM applications, including:

- Chatbots and Conversational AI: Build interactive and engaging chatbots that can understand and respond to user queries in a natural and informative way.

- Question Answering Systems: Create systems that can accurately answer questions based on a given context or knowledge base.

- Text Summarization and Analysis: Develop applications that can summarize lengthy documents or analyze text to extract key insights.

- Code Generation and Assistance: Build tools that can assist developers by generating code snippets or providing suggestions.

Getting Started:

To start using PromptFlow, install the Python package (pip install promptflow promptflow-tools), install the Prompt flow extension for Visual Studio Code, or access it through Azure AI Studio. Microsoft provides comprehensive documentation and tutorials to help you get started quickly.

References:

- Prompt flow documentation: https://microsoft.github.io/promptflow/

- Prompt flow in Azure AI Foundry portal: https://learn.microsoft.com/en-us/azure/ai-studio/how-to/prompt-flow

- Operationalize Your Prompt Engineering Skills with Azure Prompt Flow for Smarter LLM Applications: https://techcommunity.microsoft.com/blog/educatordeveloperblog/operationalize-your-prompt-engineering-skills-with-azure-prompt-flow/4285171

- Get started with prompt flow to develop Large Language Model (LLM) apps: https://www.youtube.com/watch?v=02GJxwnajis

By leveraging PromptFlow’s capabilities, developers can simplify the process of building, testing, and deploying high-quality LLM applications, unlocking the full potential of this transformative technology.

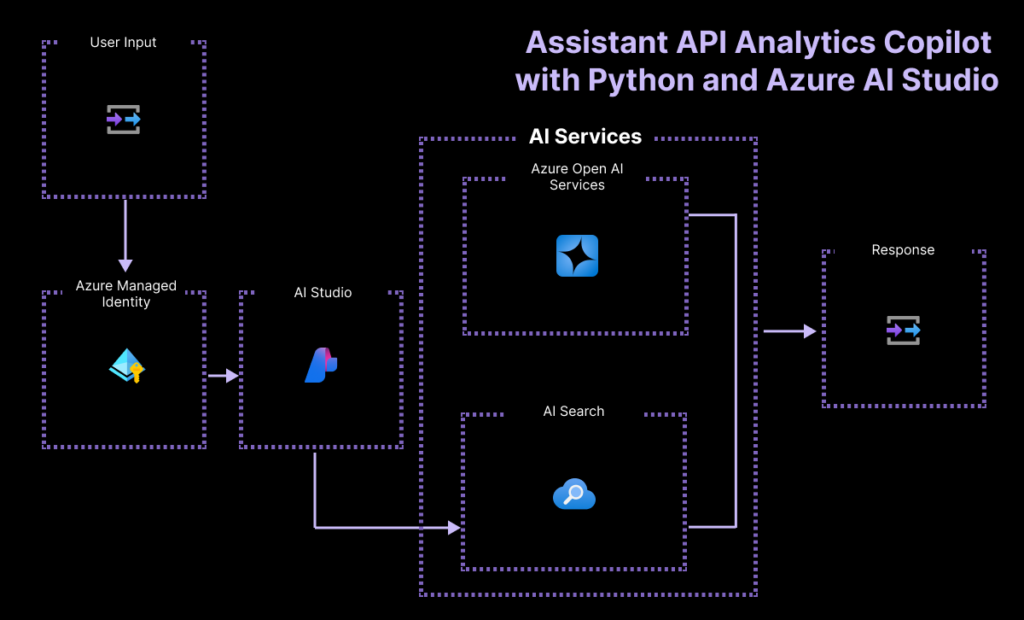

This code sample https://github.com/Azure-Samples/assistant-data-openai-python-promptflow demonstrates building a data analytics chatbot using Python and PromptFlow. The chatbot leverages the Assistants API to answer natural language questions by interpreting them as queries on a sales dataset.

Tips about LangChain

LangChain is a powerful and versatile framework designed to simplify the development of applications that leverage large language models (LLMs). Its strength lies in its modularity and ability to seamlessly integrate LLMs with various tools and data sources, enabling the creation of complex and sophisticated LLM-driven applications.

Key Features and Benefits:

- Modular Components: LangChain provides a wide range of modular components that can be easily combined and customized to build diverse LLM applications. These components include:

  - Models: Interfaces with various LLMs, chat models, and text embedding models.

  - Prompts: Tools for creating and managing prompts, including templating and prompt chains.

  - Memory: Short-term and long-term memory modules to maintain context and history in LLM interactions.

  - Chains: Sequences of calls that link different components together to create complex workflows.

  - Agents: Decision-making components that determine the next action in a workflow based on the LLM’s output.

  - Indexes: Efficiently access and retrieve information from external data sources.

- Flexible Integration: LangChain excels at integrating LLMs with other tools and services. It provides integrations with:

  - Data Sources: Connect to various data sources, including databases, APIs, and cloud storage.

  - Development Tools: Integrate with popular development tools and frameworks.

  - External Services: Connect to external services like vector databases, search engines, and code interpreters.

- Chain Creation: LangChain’s core functionality revolves around creating chains that link together different components. This allows for complex LLM interactions, where the output of one step can be used as input for the next.

- Customization: LangChain is highly customizable, allowing developers to tailor the framework to their specific needs. This includes creating custom components, modifying existing ones, and defining unique workflows.

- Active Community: LangChain boasts a vibrant and active community of developers and users. This ensures continuous improvement, frequent updates, and readily available support.
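LangChain's expression language composes steps with the `|` operator, as in `prompt | model | parser`, so the output of one step becomes the input of the next. The plain-Python sketch below reimplements only that composition idea. `Step`, `fake_model`, and the other names are hypothetical, the "model" is a canned stub rather than an LLM, and this is not LangChain's implementation.

```python
from typing import Any, Callable


class Step:
    """Hypothetical runnable: wraps a function and supports `a | b` composition."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def __or__(self, other: "Step") -> "Step":
        # Composition: run self, then feed its output into other.
        return Step(lambda value: other.func(self.func(value)))

    def invoke(self, value: Any) -> Any:
        return self.func(value)


# Prompt step: turn structured input into prompt text.
prompt = Step(lambda d: f"Translate to French: {d['text']}")
# Stub model: canned reply with stray whitespace, standing in for an LLM.
fake_model = Step(lambda p: "  Bonjour  " if p.endswith("Hello") else "  ?  ")
# Output parser: clean up the raw model text.
parser = Step(str.strip)

chain = prompt | fake_model | parser
answer = chain.invoke({"text": "Hello"})  # "Bonjour"
```

Because every step exposes the same `invoke` interface, swapping the stub for a real model or adding a step does not change the rest of the chain.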

Use Cases:

LangChain is well-suited for a variety of LLM applications, including:

- Chatbots and Conversational AI: Build sophisticated chatbots that can engage in complex conversations, access external information, and perform actions.

- Question Answering Systems: Create advanced question-answering systems that can retrieve information from multiple sources and provide comprehensive answers.

- Text Summarization and Paraphrasing: Develop applications that can summarize complex texts or rephrase them in different styles.

- Code Generation and Debugging: Build tools that can generate code, assist with debugging, and provide code explanations.
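Question-answering systems like these usually follow a retrieve-then-generate pattern: find the passages most relevant to the question, then place them in the prompt so the model answers from them rather than from its training data alone. The sketch below uses naive word-overlap scoring in plain Python. Real LangChain applications typically use embeddings and a vector store instead, and `retrieve`, `build_prompt`, and the sample documents are hypothetical.

```python
import re
from typing import List, Set

# Hypothetical knowledge base.
DOCUMENTS = [
    "Orders ship within two business days.",
    "Refunds are issued to the original payment method.",
    "Support is available Monday to Friday.",
]


def tokenize(text: str) -> Set[str]:
    """Lowercase word set used for overlap scoring."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question: str, docs: List[str], k: int = 1) -> List[str]:
    """Rank documents by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: List[str]) -> str:
    # Grounding: instruct the model to answer only from the retrieved context.
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "How are refunds issued?"
prompt = build_prompt(question, retrieve(question, DOCUMENTS))
# The prompt now contains the refunds passage and would be sent to an LLM.
```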

Getting Started:

LangChain is available as a Python package and can be installed with pip (pip install langchain). The official documentation provides comprehensive guides, tutorials, and examples to help you get started.

References:

- LangChain Documentation: https://python.langchain.com/docs/introduction/

- LangChain GitHub Repository: https://github.com/langchain-ai/langchain

- LangChain Tutorials: https://python.langchain.com/docs/tutorials/

- Building a Simple LLM Application with Langchain: https://www.scalablepath.com/machine-learning/langchain-tutorial

By leveraging LangChain’s modularity, flexibility, and extensive integrations, developers can create powerful and innovative LLM-powered applications that address a wide range of real-world challenges.

Semantic Kernel (SK) is an open-source SDK from Microsoft designed to make it easier for developers to integrate Large Language Models (LLMs) like OpenAI’s GPT models into their applications. It acts as a bridge between the world of LLMs and traditional programming, allowing you to combine the strengths of both.

Key Features and Benefits:

- AI Orchestration: SK excels at orchestrating complex workflows that involve both AI capabilities (like text generation, summarization, translation) and your own custom code or business logic. It allows you to chain together different AI “skills” with your existing functions and APIs.

- Plugins: SK uses a plugin architecture to organize and manage AI capabilities. Plugins encapsulate prompts, LLMs, and native functions, making it easy to add, remove, and reuse them in your applications.

- Planners: For more dynamic scenarios, SK offers “planners” that can automatically generate plans to achieve a user’s goal by leveraging function calling. This allows for more flexible and adaptable AI interactions.

- Memory: SK provides memory capabilities to store context and embeddings, which can be used to provide LLMs with relevant information and improve their responses.

- Connectors: SK includes connectors to popular LLMs like OpenAI, Azure OpenAI, and Hugging Face, making it easy to switch between different models.

- Extensibility: SK is designed to be extensible, allowing you to create custom plugins, connectors, and planners to meet your specific needs.
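Embedding-based memory stores each piece of text alongside a vector and later recalls the entries whose vectors are closest to the query's vector. The sketch below shows that lookup with cosine similarity in plain Python. The three-dimensional vectors are hand-written toy values (a real application would get them from an embedding model through one of the connectors), and `MemoryStore` is a hypothetical name, not Semantic Kernel's memory API.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


class MemoryStore:
    """Hypothetical in-memory store of (text, embedding) records."""

    def __init__(self) -> None:
        self.records: List[Tuple[str, List[float]]] = []

    def save(self, text: str, embedding: List[float]) -> None:
        self.records.append((text, embedding))

    def search(self, query_embedding: List[float], limit: int = 1) -> List[Tuple[str, float]]:
        # Score every record and return the most similar ones first.
        scored = [(text, cosine(query_embedding, emb)) for text, emb in self.records]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:limit]


memory = MemoryStore()
# Toy embeddings: dimensions loosely mean (billing, shipping, accounts).
memory.save("Customer prefers refunds to store credit.", [0.9, 0.1, 0.0])
memory.save("Customer's parcel was delayed last week.", [0.1, 0.9, 0.1])
memory.save("Customer enabled two-factor login.", [0.0, 0.1, 0.9])

best = memory.search([0.8, 0.2, 0.0])  # a billing-flavored query
# best[0][0] == "Customer prefers refunds to store credit."
```

The recalled text would then be added to the prompt, giving the LLM relevant context it was never trained on.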

How it Works:

- Define Plugins: You create plugins that encapsulate AI “skills” (like summarizing text or translating languages) using prompts and LLMs.

- Orchestrate with Kernel: The SK kernel acts as the orchestrator, managing the execution of plugins and the flow of data between them.

- Integrate with Your Code: You can seamlessly integrate SK with your existing code, allowing LLMs to interact with your functions, data, and APIs.
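With function calling, the model does not run your code itself. It replies with the name and arguments of a function it wants called; the kernel runs that function and sends the result back, repeating until the model produces a final answer. The plain-Python sketch below simulates that loop. `scripted_model` is a hypothetical stub that returns canned responses in place of a real LLM, and the message format and names are assumptions, not Semantic Kernel's.

```python
from typing import Any, Callable, Dict, List


def get_order_status(order_id: str) -> str:
    """Hypothetical native function exposed to the model as a tool."""
    return {"A1": "shipped", "B2": "processing"}.get(order_id, "unknown")


# Registry of callable tools, keyed by a Plugin.function style name.
TOOLS: Dict[str, Callable[..., str]] = {"OrderPlugin.get_order_status": get_order_status}


def scripted_model(messages: List[Dict[str, str]]) -> Any:
    """Stub LLM: first requests a tool call, then answers from the tool result."""
    tool_results = [m["content"] for m in messages if m["role"] == "tool"]
    if not tool_results:
        # A tool request: which function to call and with what arguments.
        return {"name": "OrderPlugin.get_order_status", "arguments": {"order_id": "A1"}}
    return f"Your order A1 is {tool_results[-1]}."


def run_with_function_calling(model: Callable[[List[Dict[str, str]]], Any],
                              user_input: str, max_steps: int = 5) -> str:
    """Loop until the model returns a plain string (its final answer)."""
    messages = [{"role": "user", "content": user_input}]
    for _ in range(max_steps):
        reply = model(messages)
        if isinstance(reply, str):
            return reply
        # The kernel, not the model, executes the requested function.
        result = TOOLS[reply["name"]](**reply["arguments"])
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("model did not produce a final answer within max_steps")


answer = run_with_function_calling(scripted_model, "Where is my order A1?")
# answer == "Your order A1 is shipped."
```

The `max_steps` cap is a simple guard against a model that keeps requesting tools without ever answering.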

Use Cases:

- Chatbots and Assistants: Build intelligent chatbots that can understand complex requests, access external information, and perform actions.

- Content Generation: Automate content creation tasks like writing articles, generating reports, or creating marketing materials.

- Code Generation: Assist developers with code generation, debugging, and documentation.

- Business Process Automation: Automate complex business processes by integrating AI with existing workflows.

Getting Started:

SK is available for C#, Python, and Java. You can find the SDK on GitHub and detailed documentation on Microsoft Learn.

References:

- Semantic Kernel GitHub Repository: https://github.com/microsoft/semantic-kernel

- Semantic Kernel Documentation: https://learn.microsoft.com/en-us/semantic-kernel/overview/

- Blog Post: Combine AI Models & Custom Software with Microsoft’s Semantic Kernel: https://adamcogan.com/2023/09/10/ai-models-with-microsofts-semantic-kernel/

- Blog Post: Semantic Kernel Learnings: https://devblogs.microsoft.com/ise/semantic-kernel-learnings/

By combining the power of LLMs with traditional programming logic, Semantic Kernel provides a robust and flexible framework for building the next generation of AI-powered applications.

LangGraph: Building Stateful Language Agents as Graphs

LangGraph is a powerful library designed for constructing stateful, multi-actor applications powered by Large Language Models (LLMs). It’s specifically geared towards creating intricate agent and multi-agent workflows, offering unique advantages over traditional LLM frameworks.

Key Features and Benefits:

- Cycles and Branching: Unlike frameworks that model workflows as directed acyclic graphs (DAGs), LangGraph supports cycles within your application flows. This is essential for building agentic architectures that require iterative processing and decision-making, such as an agent that keeps calling tools until it has enough information to answer.

- Persistence: LangGraph automatically saves the state of your application after each step in the graph. This enables features like:

  - Error recovery: Resume execution from a saved checkpoint.

  - Human-in-the-loop: Pause execution for human intervention or approval.

  - Time-travel debugging: Inspect past states to understand the flow of execution.

- Controllability: As a low-level framework, LangGraph provides fine-grained control over both the flow and state of your application. This is crucial for building reliable and predictable agents.

- Integration with LangChain: LangGraph seamlessly integrates with the broader LangChain ecosystem, including tools, agents, and the LangSmith platform for debugging and monitoring.

- LangGraph Platform: This commercial platform built on the open-source LangGraph framework provides infrastructure for deploying and managing LangGraph agents in production. It offers features like:

  - LangGraph Server: APIs for interacting with your deployed agents.

  - LangGraph SDKs: Client libraries for accessing the server APIs.

  - LangGraph CLI: Command-line tools for building and deploying agents.

  - LangGraph Studio: A UI for debugging and visualizing your agent workflows.
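The two ideas that set LangGraph apart, cycles and per-step persistence, can be illustrated without the library. The plain-Python sketch below runs a tiny draft-and-review loop: a `review` node sends control back to `draft` until a condition holds, and a snapshot of the state is checkpointed after every step. The node names, the `MAX_REVISIONS` condition, and the list-based checkpoint store are hypothetical; LangGraph's own `StateGraph` API and checkpointers are not shown here.

```python
import copy
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, object]
MAX_REVISIONS = 3  # hypothetical stopping condition for the loop


def draft(state: State) -> State:
    # Stand-in for an LLM call that rewrites the draft.
    revision = int(state["revision"]) + 1
    return {**state, "revision": revision, "text": f"draft v{revision}"}


def review(state: State) -> State:
    # Stand-in for a critic step; approves once enough revisions exist.
    return {**state, "approved": int(state["revision"]) >= MAX_REVISIONS}


NODES: Dict[str, Callable[[State], State]] = {"draft": draft, "review": review}


def next_node(current: str, state: State) -> Optional[str]:
    """Edges: draft -> review; review -> draft (the cycle) or end (None)."""
    if current == "draft":
        return "review"
    return None if state["approved"] else "draft"


def run(state: State, start: str = "draft") -> Tuple[State, List[Tuple[str, State]]]:
    checkpoints: List[Tuple[str, State]] = []
    node: Optional[str] = start
    while node is not None:
        state = NODES[node](state)
        # Persistence: snapshot the state after every step.
        checkpoints.append((node, copy.deepcopy(state)))
        node = next_node(node, state)
    return state, checkpoints


final, history = run({"revision": 0, "text": "", "approved": False})
# Resume from the checkpoint taken after the first review (error recovery or time travel).
resumed, _ = run(history[1][1], start=next_node(*history[1]))
```

Because each checkpoint records both the node that just ran and the resulting state, execution can restart from any point without replaying earlier steps.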

Why Choose LangGraph?

LangGraph is particularly well-suited for building complex, stateful LLM applications that require:

- Agentic behavior: Applications where the LLM needs to make decisions, take actions, and interact with its environment.

- Multi-actor scenarios: Applications involving multiple agents collaborating or competing.

- Human-in-the-loop: Applications where human intervention is required at certain points in the workflow.

- Long-running processes: Applications that need to maintain state over extended periods.

Getting Started:

- Installation: pip install -U langgraph

- Documentation: Explore the comprehensive documentation, including tutorials, how-to guides, and API references, on the LangChain website.

- LangChain Academy: Take the free “Introduction to LangGraph” course on LangChain Academy for a guided learning experience.

References:

- LangGraph GitHub Repository: https://github.com/langchain-ai/langgraph

Advantages of LangGraph:

- Cycles and Statefulness: The ability to handle cycles and maintain state is a major advantage for building complex, agentic applications that go beyond simple linear workflows.

- Fine-grained Control: LangGraph gives you low-level control over the flow and state, allowing for precise customization of agent behavior.

- Persistence: Built-in persistence simplifies error recovery, human-in-the-loop interactions, and debugging.

- Integration with LangChain: LangGraph works seamlessly within the LangChain ecosystem, leveraging its tools, agents, and the LangSmith platform.

- LangGraph Platform: The commercial platform offers robust infrastructure for deploying and managing agents in production, including features like streaming support, background runs, and human-agent collaboration.
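Human-in-the-loop support follows from persistence: the run stops before a sensitive step, its state is saved, and it resumes once a person has weighed in. The plain-Python sketch below pauses before a hypothetical `send_refund` step and resumes with the reviewer's decision. The dictionary standing in for a checkpoint store, the thread IDs, and all function names are assumptions for illustration, not LangGraph's interrupt API.

```python
from typing import Dict

State = Dict[str, object]
CHECKPOINTS: Dict[str, State] = {}  # hypothetical store keyed by thread ID


def propose_refund(state: State) -> State:
    # Stand-in for an agent step that decides a refund amount.
    return {**state, "amount": 25.0}


def send_refund(state: State) -> State:
    # Sensitive action that should only run after a human decision.
    return {**state, "status": "refunded" if state["approved"] else "rejected"}


def start(thread_id: str, request: str) -> State:
    state = propose_refund({"request": request})
    # Interrupt: save the state and stop before the sensitive step.
    CHECKPOINTS[thread_id] = state
    return {**state, "status": "awaiting_approval"}


def resume(thread_id: str, approved: bool) -> State:
    # Load the saved state, add the human decision, and continue the run.
    state = {**CHECKPOINTS.pop(thread_id), "approved": approved}
    return send_refund(state)


paused = start("t1", "Item arrived broken")  # status: awaiting_approval
done = resume("t1", approved=True)           # status: refunded
```

Keying checkpoints by a thread ID lets many paused runs wait for review at the same time.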

Disadvantages of LangGraph:

- Relative Newness: Being a newer library, it might have a smaller community and fewer readily available resources compared to more established tools like LangChain’s core modules.

- Learning Curve: The low-level nature of LangGraph might present a steeper learning curve for some developers, especially those new to graph-based programming or stateful agent design.

- Limited Language Support: LangGraph is available for Python and JavaScript/TypeScript (LangGraph.js), so developers working in other languages may find this a limitation.

- Platform Dependency: While the core LangGraph library is open-source, some of the advanced features and production-ready capabilities rely on the commercial LangGraph Platform, which might involve costs.

Overall:

LangGraph is a promising tool for building sophisticated LLM-powered agents. Its strengths lie in its ability to handle complex, stateful workflows and provide fine-grained control. However, its relative newness and potential learning curve are factors to consider.

If you’re looking to build advanced language agents with complex behaviors and interactions, LangGraph is definitely worth exploring. If you’re new to LLM development or prefer a simpler approach, starting with LangChain’s core modules might be a more gradual introduction.

Conclusion

PromptFlow, LangChain, and Semantic Kernel each offer unique strengths for developing LLM-based applications. PromptFlow excels in prompt engineering and visual flow orchestration, while LangChain provides a modular and extensible framework with strong memory management and a gentler learning curve for beginners [37]. Semantic Kernel focuses on integrating LLMs with conventional programming languages and offers powerful AI orchestration capabilities, making it well-suited for enterprise-grade solutions [37]. The choice of tool depends on the specific needs of the project, preferred programming languages, and desired level of integration and flexibility.

It’s important to recognize that these tools are not mutually exclusive. In fact, combining them can often lead to more robust and versatile solutions. For instance, PromptFlow’s strength in prompt engineering can be complemented by LangChain’s modularity and diverse integrations or Semantic Kernel’s AI orchestration capabilities for more complex scenarios [18]. LangGraph can be integrated with LangChain to build agents that can interact with their environment and maintain state over time.

As the field of LLM development continues to evolve, we can expect these tools to mature further, offering even more sophisticated features and integrations. The future likely holds more seamless integration between LLMs and traditional programming paradigms, enabling developers to build increasingly intelligent and complex AI applications. This continuous evolution will empower developers to push the boundaries of what’s possible with LLMs, leading to innovative solutions across various domains.

References

1. Prompt flow documentation – Microsoft Open Source: https://microsoft.github.io/promptflow/

2. learn.microsoft.com: https://learn.microsoft.com/en-us/azure/ai-studio/how-to/prompt-flow

3. Azure’s PromptFlow: Deploying LLM Applications in Production – Advancing Analytics: https://www.advancinganalytics.co.uk/blog/2023/10/30/azure-machine-learning-prompt-flow-a-comprehensive-guide

4. Prompt flow in Azure AI Foundry portal – Microsoft Learn: https://learn.microsoft.com/en-us/azure/ai-studio/how-to/prompt-flow

5. What is Azure Machine Learning prompt flow – Microsoft Learn: https://learn.microsoft.com/en-us/azure/machine-learning/prompt-flow/overview-what-is-prompt-flow?view=azureml-api-2

6. promptflow-azure – PyPI: https://pypi.org/project/promptflow-azure/

7. C# Flow Creation · Issue #1317 · microsoft/promptflow – GitHub: https://github.com/microsoft/promptflow/issues/1317

8. Building your own copilot – yes, but how? (Part 2 of 2) – Microsoft Tech Community: https://techcommunity.microsoft.com/blog/azuredevcommunityblog/building-your-own-copilot-%E2%80%93-yes-but-how-part-2-of-2/4112801

9. microsoft/promptflow-resource-hub – GitHub: https://github.com/microsoft/promptflow-resource-hub

10. microsoft/promptflow: Build high-quality LLM apps – from prototyping, testing to production deployment and monitoring. – GitHub: https://github.com/microsoft/promptflow

11. MIT License – microsoft/promptflow – GitHub: https://github.com/microsoft/promptflow/blob/main/LICENSE

12. Promptflow Azure Prompt Flow Pricing – Restack: https://www.restack.io/p/promptflow-azure-prompt-flow-answer-pricing-cat-ai

13. Azure AI Foundry – Pricing: https://azure.microsoft.com/en-us/pricing/details/ai-foundry/

14. python.langchain.com: https://python.langchain.com/docs/introduction/#:~:text=LangChain%20is%20a%20framework%20for,components%20and%20third%2Dparty%20integrations.

15. Introduction | 🦜️ LangChain: https://python.langchain.com/docs/introduction/

16. What Is LangChain? A Complete Comprehensive Overview – DataStax: https://www.datastax.com/guides/what-is-langchain

17. What Is LangChain? Features, Advantages, and How to Begin – Trantorinc: https://www.trantorinc.com/blog/what-is-langchain

18. Comparison of Prompt Flow, Semantic Kernel, and LangChain for AI: https://www.advancinganalytics.co.uk/blog/2024/3/25/comparison-of-prompt-flow-semantic-kernel-and-langchain-for-ai-development

19. What is LangChain? – AWS: https://aws.amazon.com/what-is/langchain/

20. What is LangChain? A Comprehensive Guide to the Framework – Data Science Dojo: https://datasciencedojo.com/blog/what-is-langchain/

21. LangChain – Wikipedia: https://en.wikipedia.org/wiki/LangChain

22. What is the LangChain Framework? + Example | by firstfinger – Medium: https://medium.com/@firstfinger/what-is-langchain-framework-example-2ece7242127d

23. Understanding the LangChain Framework | by TechLatest.Net: https://medium.com/@techlatest.net/understanding-the-langchain-framework-8624e68fca32

24. The LangChain Community: https://www.langchain.com/community

25. LangSmith Pricing – LangChain: https://www.langchain.com/pricing-langsmith

26. learn.microsoft.com: https://learn.microsoft.com/en-us/semantic-kernel/

27. Introduction to Semantic Kernel | Microsoft Learn: https://learn.microsoft.com/en-us/semantic-kernel/overview/

28. microsoft/semantic-kernel: Integrate cutting-edge LLM technology quickly and easily into your apps – GitHub: https://github.com/microsoft/semantic-kernel

29. How to create a GenAI agent using Semantic Kernel | Nearform: https://www.nearform.com/digital-community/how-to-create-a-genai-agent-using-semantic-kernel/

30. Understanding Semantic Kernel: A Comprehensive Guide – Greg Dziedzic: https://gregdziedzic.com/understanding-semantic-kernel-a-comprehensive-guide/

31. Semantic Kernel 101 – CODE Magazine: https://www.codemag.com/Article/2401091/Semantic-Kernel-101

32. Introduction to Semantic Kernels: What They Are and Why They Matter? – NashTech Blogs: https://blog.nashtechglobal.com/introduction-to-semantic-kernels-what-they-are-and-why-they-matter/

33. Supported languages for Semantic Kernel | Microsoft Learn: https://learn.microsoft.com/en-us/semantic-kernel/get-started/supported-languages

34. geffzhang/awesome-semantickernel: Awesome list of tools and projects with the awesome semantic kernel framework – GitHub: https://github.com/geffzhang/awesome-semantickernel

35. Category: Ecosystem from Semantic Kernel – Microsoft Developer Blogs: https://devblogs.microsoft.com/semantic-kernel/category/ecosystem/

36. MIT license – microsoft/semantic-kernel – GitHub: https://github.com/microsoft/semantic-kernel/blob/main/LICENSE

37. PromptFlow vs LangChain vs Semantic Kernel: https://techcommunity.microsoft.com/blog/educatordeveloperblog/llm-based-development-tools-promptflow-vs-langchain-vs-semantic-kernel/4149252
